Introduction

In empirical research, the reliability of measurement instruments is a critical determinant of data quality. Whether a study focuses on students’ motivation, consumer behaviour, or investors’ financial attitudes, researchers must ensure that all questionnaire items consistently measure the same construct. One of the most widely accepted indicators of such internal consistency is Cronbach’s Alpha (α), introduced by Lee Cronbach in 1951. It estimates the degree to which a group of items collectively represents a single latent variable.

As research increasingly relies on survey-based instruments, Cronbach’s Alpha serves as a foundation for assessing reliability across disciplines such as education, psychology, business, healthcare, and finance. A reliable scale ensures that any variations in responses reflect actual differences among participants rather than inconsistencies within the measurement tool itself. Hence, Cronbach’s Alpha remains indispensable for verifying that data are both credible and replicable.

Understanding Cronbach’s Alpha

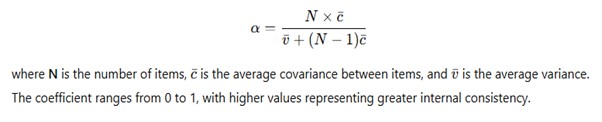

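Cronbach’s Alpha summarises how strongly the items in a scale covary relative to the variance of the total score. It rises with both the number of items and the average inter-item correlation. It is expressed as:

$$\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k}\sigma_i^{2}}{\sigma_T^{2}}\right)$$

where k is the number of items, σᵢ² is the variance of item i, and σ_T² is the variance of respondents’ total (summed) scores.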
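As a rough illustration, the standard variance-based form of alpha can be computed in a few lines of Python. This is a minimal sketch, not a replacement for a statistics package: the function name `cronbach_alpha` and the six-respondent data set are made up for the example, and sample variances (n − 1 denominator) are assumed.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row per respondent, one score per item)."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point Likert responses: 6 respondents x 4 items.
pilot = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 4],
    [1, 2, 1, 2],
    [4, 4, 5, 4],
]
alpha = cronbach_alpha(pilot)
print(round(alpha, 3))
```

Because the invented responses move together closely, the resulting alpha is high; real questionnaire data will usually be noisier.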

Standard benchmarks used by researchers include:

α ≥ 0.9: Excellent reliability

0.8 ≤ α < 0.9: Good reliability

0.7 ≤ α < 0.8: Acceptable reliability

0.6 ≤ α < 0.7: Questionable reliability

α < 0.6: Poor reliability

While there is no universal cut-off, an alpha value below 0.7 typically suggests that the instrument may need refinement. However, the interpretation should always consider the construct being measured and the context of the research.
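The benchmark bands listed above can be encoded as a small lookup, which is sometimes convenient when reporting many scales at once. The sketch below is illustrative only: `interpret_alpha` is a hypothetical helper, and the labels are the conventional rules of thumb quoted in this article, not fixed statistical thresholds.

```python
def interpret_alpha(alpha):
    """Map an alpha value to the rule-of-thumb label used in this article."""
    if alpha >= 0.9:
        return "Excellent"
    if alpha >= 0.8:
        return "Good"
    if alpha >= 0.7:
        return "Acceptable"
    if alpha >= 0.6:
        return "Questionable"
    return "Poor"


for value in (0.92, 0.78, 0.55):
    print(value, interpret_alpha(value))
```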

What Happens When Cronbach’s Alpha is Less than 0.6?

When the value of Cronbach’s Alpha falls below 0.6, it generally signals poor internal consistency, meaning that the items may not be measuring the same underlying construct effectively. Several possibilities could explain this result:

- Some items may be ambiguous or misinterpreted by respondents.

- The items may represent different dimensions rather than a single variable.

- There may be too few items to produce stable correlations.

- Respondents may have given inconsistent or random responses due to unclear wording or survey fatigue.

A low alpha suggests that the scale’s reliability is insufficient for drawing dependable conclusions. In such cases, researchers typically review the questionnaire’s content, remove weak items, or conduct further testing before using the data for hypothesis testing or inferential analysis.
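The effect of having too few items can be illustrated with the standardised form of alpha, which depends only on the number of items k and the mean inter-item correlation: α = k·r̄ / (1 + (k − 1)·r̄). The sketch below assumes standardised items (equal variances) and an arbitrary mean correlation of 0.3; the function name is hypothetical.

```python
def standardized_alpha(k, mean_r):
    """Standardised alpha from the item count k and the mean inter-item correlation."""
    return (k * mean_r) / (1 + (k - 1) * mean_r)


# Same modest mean correlation (0.3, an assumed value), different scale lengths.
for k in (3, 5, 10, 20):
    print(k, round(standardized_alpha(k, 0.3), 2))
```

With the same modest correlation among items, a three-item scale falls below 0.6 while a ten-item scale clears 0.8, so a low alpha on a short scale does not by itself show that the items are poorly written.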

How to Improve Cronbach’s Alpha

Improving a low Cronbach’s Alpha involves several systematic steps:

- Eliminate poorly correlated items: Examine the “Item-Total Statistics” table in software such as SPSS to identify questions that reduce reliability. Removing those items can increase α.

- Rephrase ambiguous questions: Items with confusing wording or double meanings should be rewritten to ensure that respondents interpret them consistently.

- Add more relevant items: Increasing the number of well-constructed items that reflect the same construct can enhance reliability.

- Maintain clarity and simplicity: Avoid complex phrasing, double-barrelled questions, or negatively worded statements, as they can distort responses.

- Conduct pilot testing: Before large-scale data collection, testing the questionnaire with a smaller group helps identify problematic items and calculate an initial Cronbach’s Alpha for refinement.

Through such adjustments, the reliability coefficient can be gradually improved to reach an acceptable level (typically above 0.7), thereby ensuring consistency across responses.
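The first step in the list, spotting items that drag reliability down, amounts to recomputing alpha with each item left out in turn, which is what the "Cronbach’s Alpha if Item Deleted" column in SPSS reports. A minimal sketch, assuming sample variances and using invented data in which the fourth item runs against the other three; the function names are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row per respondent)."""
    k = len(responses[0])
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def alpha_if_item_deleted(responses):
    """Return alpha recomputed with each item removed in turn."""
    k = len(responses[0])
    results = []
    for drop in range(k):
        # Keep every column except the one being dropped.
        reduced = [[v for i, v in enumerate(row) if i != drop] for row in responses]
        results.append(cronbach_alpha(reduced))
    return results


# Hypothetical data: items 1-3 agree with each other, item 4 does not.
pilot = [
    [4, 5, 4, 2],
    [2, 2, 3, 4],
    [5, 4, 5, 3],
    [3, 3, 3, 1],
    [1, 2, 1, 5],
    [4, 4, 5, 2],
]
full = cronbach_alpha(pilot)
deleted = alpha_if_item_deleted(pilot)
print("full scale:", round(full, 2))
for i, a in enumerate(deleted, start=1):
    print(f"without item {i}:", round(a, 2))
```

For this made-up data, alpha is about 0.31 with all four items and about 0.94 once the fourth is dropped. An item should still be removed only if doing so also makes sense conceptually, not merely because the coefficient rises.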

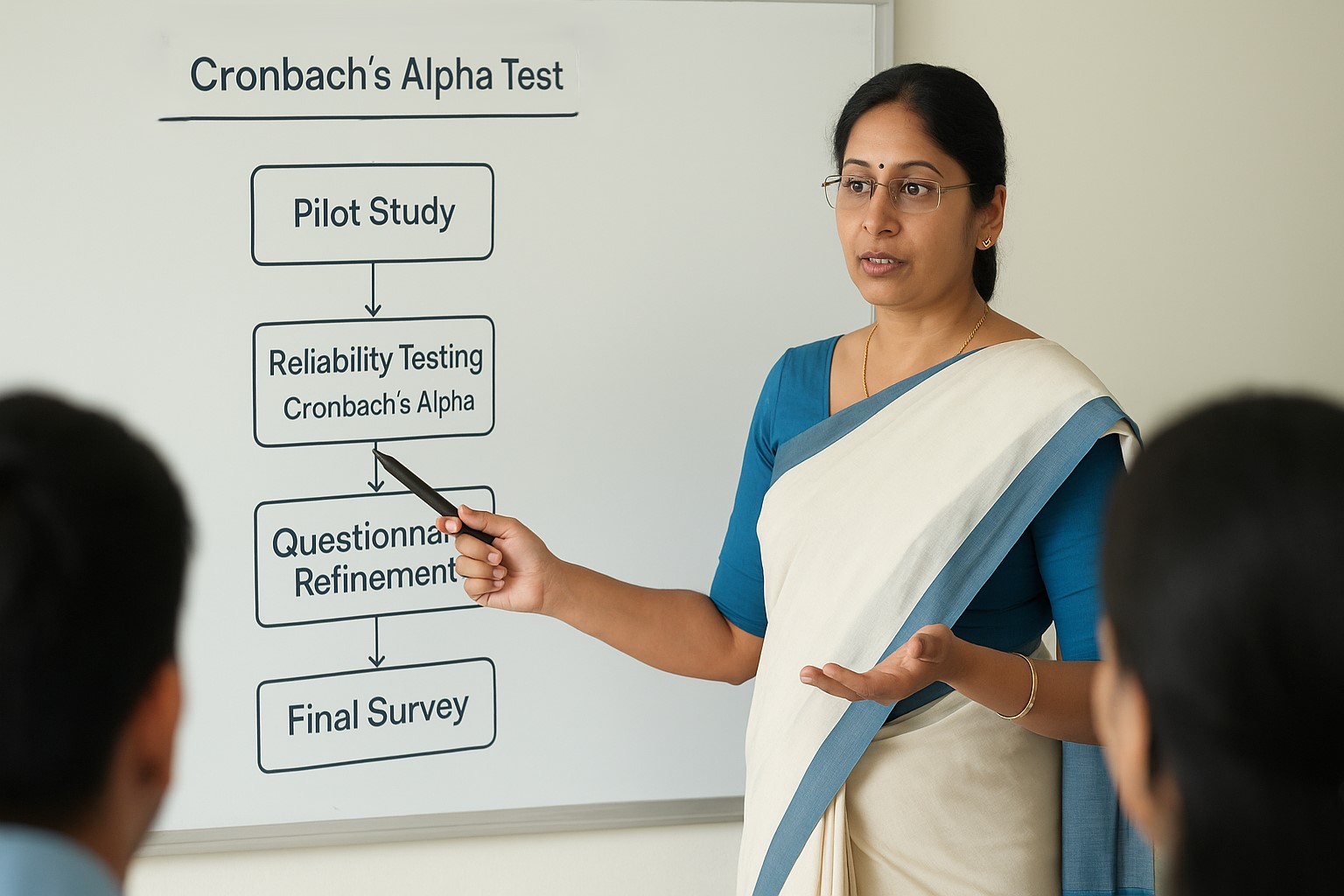

Cronbach’s Alpha in Pilot Studies for Reliability Analysis

Pilot studies are a crucial preliminary step in any quantitative research project. They allow researchers to evaluate the clarity, structure, and consistency of questionnaire items before full-scale administration. In this context, Cronbach’s Alpha is the primary tool used to assess the internal consistency of the scale during the pilot phase.

For instance, a researcher developing a 25-item questionnaire on “Digital Financial Literacy” may distribute it to a small sample of 40–50 respondents. The resulting Cronbach’s Alpha value helps determine whether the items function cohesively. If α = 0.78, the scale can be considered reliable for the main survey. However, if α = 0.55, the researcher must revise or remove weak items. Thus, pilot testing with Cronbach’s Alpha acts as an early reliability filter, ensuring that only dependable instruments are deployed in the final study.

Importantly, pilot studies also reveal whether items are culturally appropriate, easily understood, and free from bias — factors that indirectly influence reliability scores.

Applicability of Cronbach’s Alpha: Focus on Likert Scale Statements

Cronbach’s Alpha is most suitable for Likert-type scales, where responses are measured on an ordinal continuum (e.g., 1 = Strongly Disagree to 5 = Strongly Agree). Such items are designed to assess attitudes, opinions, or perceptions through several statements that tap the same construct. The underlying assumption is that all items contribute equally to measuring a single latent variable, which makes them ideal for internal consistency analysis.

However, Cronbach’s Alpha is not appropriate for all types of data. It should not be used for:

- Dichotomous items (yes/no, true/false), for which the Kuder-Richardson formulas (KR-20 or KR-21) are conventionally reported instead; KR-20 is the special case of alpha for binary-scored items.

- Open-ended questions or qualitative responses.

- Single-item measures, as alpha requires inter-item correlations.

Thus, Cronbach’s Alpha is best applied when researchers use Likert-scale items that collectively represent a unified construct, such as satisfaction, motivation, or behavioural intention.
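For the dichotomous case mentioned in the list above, KR-20 replaces each item variance with p(1 − p), where p is the proportion of respondents scoring 1 on that item. The sketch below uses invented yes/no data and hypothetical function names; it also computes alpha on the same data to show that the two coefficients coincide for binary items.

```python
from statistics import pvariance, variance


def kr20(responses):
    """Kuder-Richardson 20 for rows of 0/1 item scores."""
    k = len(responses[0])
    n = len(responses)
    # Proportion of respondents scoring 1 on each item.
    p = [sum(row[i] for row in responses) / n for i in range(k)]
    sum_pq = sum(pi * (1 - pi) for pi in p)
    # Population variance of total scores, matching the p * (1 - p) item variances.
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum_pq / total_var)


def cronbach_alpha(responses):
    """Cronbach's alpha using sample variances."""
    k = len(responses[0])
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical yes/no answers: 6 respondents x 4 items (1 = yes, 0 = no).
answers = [
    [1, 1, 1, 0],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]
kr = kr20(answers)
alpha = cronbach_alpha(answers)
print(round(kr, 3), round(alpha, 3))
```

The two values agree because the n / (n − 1) factor in the sample variances cancels in the ratio of item variance to total variance.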

Applications Across Domains of Study

Educational Research:

In education, Cronbach’s Alpha is used to assess instruments measuring learning motivation, academic engagement, or teaching effectiveness. For example, an α value of 0.84 for a student motivation scale indicates that the items respond consistently with one another, which supports treating them as a single measure of learning engagement.

Psychology and Behavioural Sciences:

In psychology, it is used to check the reliability of scales such as the Beck Depression Inventory (α > 0.90), indicating that the items measure depressive symptoms consistently.

Marketing and Consumer Research:

Cronbach’s Alpha validates consumer perception and brand trust scales. A marketing study showing α = 0.88 for customer satisfaction indicates cohesive measurement of customer experience dimensions.

Healthcare Studies:

Patient satisfaction and health quality assessments often use Cronbach’s Alpha (e.g., α = 0.86) to confirm consistent measurement of well-being or service quality across multiple items.

Finance and Technology:

In financial behaviour and FinTech adoption research, Cronbach’s Alpha (e.g., α = 0.83) is used to assess the reliability of constructs like investment confidence, perceived risk, and digital trust.

Interpreting and Using Cronbach’s Alpha Responsibly

Although Cronbach’s Alpha is a reliable measure of internal consistency, it should not be interpreted mechanically. A high alpha does not guarantee that a scale is unidimensional or valid; it only confirms that items are interrelated. Researchers must therefore combine Cronbach’s Alpha with factor analysis (if required), theoretical validation, and expert review to confirm construct validity. Furthermore, reliability coefficients may vary across populations and contexts, underscoring the importance of continuous testing and refinement in different samples.
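The point that a high alpha does not establish unidimensionality can be shown with simple arithmetic. The sketch below assumes a hypothetical 20-item scale made of two completely unrelated 10-item clusters (correlation 0.6 within each cluster, 0 between them) and applies the standardised alpha formula, which presumes equal item variances. All names and numbers are illustrative.

```python
def standardized_alpha(k, mean_r):
    """Standardised alpha from the item count k and the mean inter-item correlation."""
    return (k * mean_r) / (1 + (k - 1) * mean_r)


items_per_cluster = 10   # assumed: two clusters of equal size
within_r = 0.6           # assumed correlation between items in the same cluster
between_r = 0.0          # assumed: the two clusters are unrelated

k = 2 * items_per_cluster
within_pairs = 2 * (items_per_cluster * (items_per_cluster - 1) // 2)
between_pairs = items_per_cluster ** 2

# Average correlation over all distinct item pairs.
mean_r = (within_pairs * within_r + between_pairs * between_r) / (within_pairs + between_pairs)
alpha = standardized_alpha(k, mean_r)
print(round(mean_r, 3), round(alpha, 3))
```

Even though the scale measures two unrelated things, alpha comes out near 0.89, which would be labelled "good" by the usual benchmarks. This is why factor analysis is needed alongside alpha when dimensionality is in question.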

Conclusion

Cronbach’s Alpha remains a cornerstone in the reliability assessment of research instruments across disciplines. When properly applied, it ensures that multi-item scales produce consistent, replicable, and interpretable data. However, researchers must remain cautious—values below 0.6 call for revision, and pilot studies play an essential role in identifying and correcting weak items before the main data collection. Moreover, the test’s applicability primarily lies in Likert-scale instruments, where attitudes and perceptions are measured systematically.

By integrating Cronbach’s Alpha into pilot testing and final analysis, researchers can establish the internal reliability of their tools and enhance the overall credibility of their empirical findings. In doing so, they uphold the fundamental principle of scientific inquiry, that conclusions must rest upon reliable, consistent, and valid measures of reality.

References

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297–334.

Field, A. (2018). Discovering Statistics Using IBM SPSS Statistics. Sage Publications.

Tavakol, M., & Dennick, R. (2011). Making sense of Cronbach’s Alpha. International Journal of Medical Education, 2, 53–55.

Gliem, J. A., & Gliem, R. R. (2003). Calculating, interpreting, and reporting Cronbach’s Alpha reliability coefficient for Likert-type scales. Midwest Research-to-Practice Conference in Adult, Continuing, and Community Education.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric Theory (3rd ed.). McGraw-Hill.
